What are internal links?

Internal links are hyperlinks that point at (target) the same domain as the domain that the link exists on (source). In layman's terms, an internal link is one that points to another page on the same website.

Code Sample: <a href="http://www.same-domain.com/" title="Keyword Text">Keyword Text</a>
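Here is a minimal Python sketch of the definition above. It assumes "same domain" means an identical hostname, so a link from www.same-domain.com to blog.same-domain.com counts as external here. The function name `is_internal_link` is hypothetical. Relative hrefs are resolved against the source page's URL before the hosts are compared.

```python
from urllib.parse import urljoin, urlparse


def is_internal_link(source_url: str, href: str) -> bool:
    """Return True if href, resolved against source_url, stays on the source host.

    Assumption: "same domain" means the exact same hostname (case-insensitive);
    subdomains such as blog.example.com vs www.example.com count as different.
    """
    target_url = urljoin(source_url, href)  # turns "/about" into an absolute URL
    source_host = (urlparse(source_url).hostname or "").lower()
    target_host = (urlparse(target_url).hostname or "").lower()
    return source_host == target_host


page = "http://www.same-domain.com/blog/post"
print(is_internal_link(page, "http://www.same-domain.com/"))    # True
print(is_internal_link(page, "/about"))                         # True
print(is_internal_link(page, "https://www.other-domain.com/"))  # False
```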

Optimal Format

Use descriptive keywords in anchor text that give a sense of the topic or keywords the source page is trying to target.

What is an Internal Link?

Internal links are links that go from one page on a website to a different page on the same domain. They are commonly used in main navigation. These types of links are useful for three reasons:

⦁ They allow users to navigate a website.

⦁ They help establish information hierarchy for the given website.

⦁ They help spread link equity (ranking power) around websites.

SEO Best Practice

Internal links are most useful for establishing site architecture and spreading link equity (URLs are also essential). For this reason, this section is about building an SEO-friendly site architecture with internal links.

On an individual page, search engines need to see content in order to list pages in their massive keyword-based indices. They also need access to a crawlable link structure (a structure that lets spiders browse the pathways of a website) in order to find all of the pages on a website. (To get a peek at what your site's link structure looks like, try running your site through Link Explorer.) Many thousands of websites make the critical mistake of hiding or burying their main link navigation in ways that search engines cannot access. This hinders their ability to get pages listed in the search engines' indices. Below is an illustration of how this problem can happen:

In the illustrated example, Google's colorful spider has reached page "A" and sees internal links to pages "B" and "E." However important pages C and D might be to the site, the spider has no way to reach them, or even know they exist, because no direct, crawlable links point to those pages. As far as Google is concerned, these pages basically don't exist: great content, good keyword targeting, and smart marketing make no difference at all if the spiders can't reach those pages in the first place.

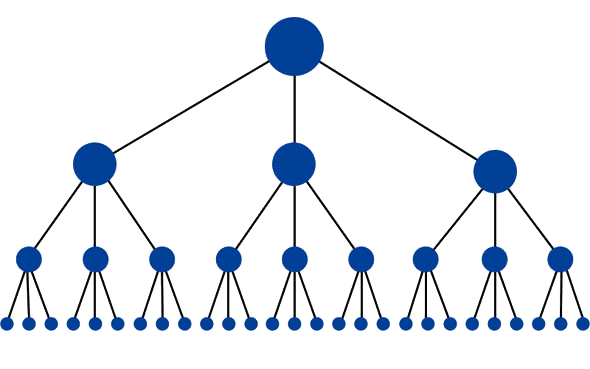
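The crawl problem in the illustration can be modeled as reachability in a directed link graph. This sketch assumes a hypothetical graph in which A links to B and E, B and E link back to A, and nothing links to C or D. A breadth-first traversal from A stands in for a spider that only follows crawlable links, and it never discovers C or D.

```python
from collections import deque

# Hypothetical link graph mirroring the illustration: page -> pages it links to.
SITE_PAGES = {"A", "B", "C", "D", "E"}
LINKS = {
    "A": ["B", "E"],
    "B": ["A"],
    "E": ["A"],
    "C": [],  # C and D exist on the site, but no crawlable link points to them
    "D": [],
}


def reachable_pages(start, links):
    """Breadth-first traversal: the set of pages a spider can reach from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


found = reachable_pages("A", LINKS)
print(sorted(found))               # ['A', 'B', 'E']
print(sorted(SITE_PAGES - found))  # ['C', 'D']  (pages the spider never finds)
```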

The optimal structure for a website would look similar to a pyramid (where the big dot on the top is the homepage).

This structure has the minimum number of links possible between the homepage and any given page. This is helpful because it allows link equity (ranking power) to flow throughout the entire site, thus increasing the ranking potential for each page. This structure is common on many high-performing websites (like Amazon.com) in the form of category and subcategory systems.
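Click depth is the minimum number of links between the homepage and a page. You can compute it with a breadth-first search over the internal link graph. The paths below are a hypothetical category/subcategory tree, not a real site. In a pyramid like this one, every page stays within a few clicks of the homepage.

```python
from collections import deque

# Hypothetical pyramid-shaped site: homepage -> categories -> subcategories -> items.
LINKS = {
    "/": ["/mammals", "/birds"],
    "/mammals": ["/mammals/cats", "/mammals/dogs"],
    "/birds": ["/birds/owls"],
    "/mammals/cats": ["/mammals/cats/siamese"],
    "/mammals/dogs": [],
    "/birds/owls": [],
    "/mammals/cats/siamese": [],
}


def click_depths(home, links):
    """Return {page: minimum number of clicks from home} using breadth-first search."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS visits each page first via a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


depths = click_depths("/", LINKS)
print(depths["/mammals/cats/siamese"])  # 3
print(max(depths.values()))             # 3 (deepest page is three clicks away)
```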

But how is this accomplished? The best way to do this is with internal links and supplementary URL structures. For example, such a site might internally link to a page located at http://www.example.com/mammals… with the anchor text "cats." Below is the format for a correctly formatted internal link. Imagine this link is on the domain jonwye.com.

<a href="http://www.jonwye.com">Jon Wye's Custom Designed Belts</a>

In the example above, the "a" tag indicates the start of a link. Link tags can contain images, text, or other objects, all of which provide a "clickable" area on the page that users can engage to move to another page. This is the original concept of the Internet: "hyperlinks." The link referral location tells the browser (and the search engines) where the link points. In this example, the URL http://www.jonwye.com is referenced. Next, the visible portion of the link for visitors, called "anchor text" in the SEO world, describes the page the link is pointing at. In this example, the page pointed to is about custom belts made by a man named Jon Wye, so the link uses the anchor text "Jon Wye's Custom Designed Belts." The closing </a> tag ends the link, so that elements later on the page will not have the link attribute applied to them.

This is the most basic format of a link, and it is eminently understandable to the search engines. The search engine spiders know that they should add this link to the engine's link graph of the web, use it to calculate query-independent variables (like MozRank), and follow it to index the contents of the referenced page.
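To see the parts of a link the way a parser does (the `a` tag, its href, the anchor text, and the closing `</a>`), here is a minimal sketch using Python's standard `html.parser`. The `LinkExtractor` class and the HTML snippet are hypothetical illustrations. This is not how any search engine actually implements its crawler.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, anchor_text) pairs from <a> elements in an HTML string."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> tag currently open, if any
        self._text = []    # text fragments seen inside that <a> tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only text inside an open link is anchor text
            self._text.append(data)

    def handle_endtag(self, tag):
        # The closing </a> ends the link; text after it is not anchor text.
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


html = '<p>Shop <a href="http://www.jonwye.com">Jon Wye\'s Custom Designed Belts</a> today.</p>'
parser = LinkExtractor()
parser.feed(html)
print(parser.links)
# [('http://www.jonwye.com', "Jon Wye's Custom Designed Belts")]
```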

Below are some common reasons why pages may not be accessible, and thus, might not be indexed.

- Links in Submission-Required Forms

Forms can include elements as basic as a drop-down menu or elements as complex as a full-blown survey. In either case, search spiders will not attempt to "submit" forms, and thus any content or links that would be accessible via a form are invisible to the engines.

- Links Only Accessible Through Internal Search Boxes

Spiders will not attempt to perform searches to find content, and thus it's estimated that many pages are hidden behind completely inaccessible internal search box walls.

- Links in Un-Parseable JavaScript

Links built using JavaScript may either be uncrawlable or devalued in weight, depending on their implementation. For this reason, it is recommended that standard HTML links be used instead of JavaScript-based links on any page where search-engine-referred traffic is important.
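Here is a simplified illustration of why script-driven links can be missed. The hypothetical collector below reads only `href` attributes on `<a>` tags and never executes JavaScript. Some search engines (Google, for example) do render JavaScript, so treat this as a model of a non-rendering crawler, not of any particular engine.

```python
from html.parser import HTMLParser


class HrefCollector(HTMLParser):
    """Collect href values from <a> tags only, the way a simple crawler that
    does not execute JavaScript might."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.hrefs.append(href)


page = """
<a href="/products">Products</a>
<span onclick="window.location='/hidden-page'">Hidden page</span>
<a href="#" onclick="window.location='/also-hidden'">Also hidden</a>
"""
collector = HrefCollector()
collector.feed(page)
print(collector.hrefs)  # ['/products', '#']
```

Only `/products` is a real destination to this collector. The span has no href at all, and the third link's true target lives inside the `onclick` script, so the collector sees just `#`.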

- Links in Flash, Java, or Other Plug-Ins

Any links embedded inside Flash, Java applets, and other plug-ins are usually inaccessible to search engines.

- Links Pointing to Pages Blocked by the Meta Robots Tag or Robots.txt

The meta robots tag and the robots.txt file both allow a site owner to restrict spider access to a page.
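Both mechanisms can be checked programmatically. This sketch uses the standard library's `urllib.robotparser` for a hypothetical robots.txt. It also uses a small `html.parser` subclass (a hypothetical helper) to read the directives in a meta robots tag. The example.com URLs are placeholders.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks every crawler from /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


class MetaRobotsReader(HTMLParser):
    """Read the directives in a <meta name="robots" content="..."> tag, if present."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


def meta_robots(html):
    """Return the set of meta robots directives found in html (empty if none)."""
    reader = MetaRobotsReader()
    reader.feed(html)
    return reader.directives


print(robots.can_fetch("*", "http://www.example.com/private/page"))  # False
print(robots.can_fetch("*", "http://www.example.com/public/page"))   # True
print(sorted(meta_robots('<meta name="robots" content="noindex, nofollow">')))
# ['nofollow', 'noindex']
```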

- Links on Pages with Hundreds or Thousands of Links

The search engines all have a rough crawl limit of 150 links per page before they may stop spidering additional pages linked to from the original page. This limit is somewhat flexible, and particularly important pages may have upwards of 200 or even 250 links followed, but in general practice, it's wise to limit the number of links on any given page to 150 or risk losing the ability to have additional pages crawled.
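A quick audit for this pitfall is to count the links on each page. The sketch below counts `<a>` tags that have an `href` and flags pages above the 150-link rule of thumb given in this section. That threshold is the article's heuristic, not a documented limit of any engine, and the helper names are hypothetical.

```python
from html.parser import HTMLParser

# Rule-of-thumb threshold from the text; a heuristic, not an engine-documented limit.
LINK_LIMIT = 150


class LinkCounter(HTMLParser):
    """Count <a> tags that carry a non-empty href attribute."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "a" and dict(attrs).get("href"):
            self.count += 1


def check_link_count(html, limit=LINK_LIMIT):
    """Return (link_count, over_limit) for one HTML page."""
    counter = LinkCounter()
    counter.feed(html)
    return counter.count, counter.count > limit


page = "".join(f'<a href="/item-{i}">Item {i}</a>' for i in range(200))
print(check_link_count(page))  # (200, True)
```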

- Links in Frames or I-Frames

Technically, links in both frames and I-Frames are crawlable, but both present structural issues for the engines in terms of organization and following. Only advanced users with a good technical understanding of how search engines index and follow links in frames should use these elements in combination with internal linking.

By avoiding these pitfalls, a webmaster can have clean, spiderable HTML links that allow the spiders easy access to their content pages. Links can have additional attributes applied to them, but the engines ignore nearly all of these, with the important exception of the rel="nofollow" attribute.

Want to get a quick glimpse into your site's indexation? Use a tool like Moz Pro, Link Explorer, or Screaming Frog to run a site crawl. Then, compare the number of pages the crawl turned up to the number of pages listed when you run a site: search on Google.

Rel="nofollow" can be used with the following syntax: <a href="/" rel="nofollow">nofollow this link</a>

In this example, by adding the rel="nofollow" attribute to the link tag, the webmaster is telling the search engines that they do not want this link to be interpreted as a normal, juice-passing "editorial vote." Nofollow came about as a method to help stop automated blog comment, guestbook, and link injection spam, but has morphed over time into a way of telling the engines to discount any link value that would ordinarily be passed. Links tagged with nofollow are interpreted slightly differently by each of the engines.
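To audit which links on a page carry nofollow, the sketch below splits `<a href>` links by their `rel` attribute. It assumes rel is a space-separated, case-insensitive list of tokens, so `rel="external nofollow"` counts. The class name is hypothetical. The code only classifies markup and does not model how any engine weights the links.

```python
from html.parser import HTMLParser


class FollowClassifier(HTMLParser):
    """Split <a href> links into followed and nofollow lists based on rel."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        # rel holds space-separated tokens, e.g. rel="external nofollow"
        rel_tokens = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow.append(href)
        else:
            self.followed.append(href)


html = (
    '<a href="/guide">Guide</a>'
    '<a href="/" rel="nofollow">nofollow this link</a>'
    '<a href="http://spam.example/" rel="external NoFollow">comment link</a>'
)
classifier = FollowClassifier()
classifier.feed(html)
print(classifier.followed)  # ['/guide']
print(classifier.nofollow)  # ['/', 'http://spam.example/']
```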